Our Nuclear Future: Facts, Dangers, and Opportunities, by Edward Teller and Albert L. Latter (Criterion Books, New York, 1958), page 139:

"It is generally believed that the First World War was caused by an arms race. For some strange reason most people forget that the Second World War was brought about by a situation which could be called a race in disarmament. The peace-loving and powerful nations divested themselves of their military power. When the Nazi regime in Germany adopted a program of rapid preparation for war, the rest of the world was caught unawares. At first they did not want to accept the fact of this menace. When the danger was unmistakable, it was too late to avert a most cruel war, and almost too late to stop Hitler short of world conquest."

Above: the 9.3 megaton Hardtack-Poplar fireball in 1958. This photo has only recently been released with the name of the test. Maybe the proximity of the aircraft (which survived) creates the wrong (not so doomsday-like) impression?

Above: a different regime of nuclear effects phenomena. Colour photos now available of the Teak fireball and surrounding red shock wave air glow. The bomb was 3.8 Mt (50% fission yield fraction) detonated at 77 km altitude nearly over Johnston Island, and was photographed in 1958 from a mountain top on Maui, 794 nautical miles away. As we mentioned in a previous post, Teller and Latter related the case of the Plumbbob-John air burst of 18 July 1957, where five men stood at ground zero (directly below the rocket carried bomb burst) without injury (although they were not looking directly at the fireball at zero time, or they would have received retinal burns). Teak was a similar case: proving that nuclear weapons can be used (for instance as high altitude bursts to destroy incoming missiles) without hazards, if they are designed to minimize prompt gamma ray output and thus EMP radiation (this can be done by the use of clean nuclear weapons with suitable tamper materials that will minimize the high-energy secondary gamma ray yield when hit by neutrons).

Teller and Latter explain that radiological warfare is a benefit compared to the carnage of using conventional weapons:

"The lifetime of the radioactive material may be long enough to give an opportunity to the people to escape from the contaminated area [longer half lives mean that the chance of a radioactive atom decaying in any given second is lower, so the specific activity is lower; e.g. if you have N radioactive atoms with a half life of T time units, then the decay rate is simply (ln 2)*N/T ~ 0.693N/T atoms decaying per unit time, thus the longer the half-life T the lower the radioactivity level that a given number of radioactive atoms produces. The factor 1/(ln 2) ~ 1.44 is what you must multiply the half-life by to get the statistical mean life, defined as the time to zero activity if the initial straight-line gradient of the decay curve, i.e. exp(-At) ~ 1 - At for decay constant A = (ln 2)/T and elapsed time t, were followed instead of the exponential curve. The exponential is of course itself just a mathematical idealization because it can never reach zero, whereas in the real world some day the final radioactive atom will decay, and zero activity will be attained after a finite time]. At the same time, one may precipitate almost all the activity near the explosion [using shallow underground detonations produced by earth penetrator warheads, like the 13.7 kt Redwing-Seminole surface burst inside a water tank at Eniwetok Atoll in 1956 to simulate shallow burial] so that distant localities would not be seriously affected. It is conceivable, therefore, that radiological warfare could be used in a humane manner. By exploding a weapon of this kind near an island one might be able to force evacuation without loss of life. No instrument, not even a weapon, is evil in itself. Everything depends on the way in which it is used."

- Edward Teller and Albert L. Latter, Our Nuclear Future: Facts, Dangers, and Opportunities (Criterion Books, New York, 1958), p. 136.
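The half-life arithmetic in the bracketed note above can be checked with a short sketch. The function names and the sample atom count are illustrative assumptions, not anything from Teller and Latter; only the formulas (decay rate = (ln 2)N/T, mean life = T/(ln 2)) come from the note.

```python
import math

def decay_rate(n_atoms: float, half_life: float) -> float:
    """Atoms decaying per unit time for n_atoms with the given half-life: (ln 2) * N / T."""
    return math.log(2) * n_atoms / half_life

def mean_life(half_life: float) -> float:
    """Statistical mean life = half-life / ln 2, i.e. about 1.44 half-lives."""
    return half_life / math.log(2)

def linear_extrapolation(t: float, half_life: float) -> float:
    """Straight-line approximation 1 - A*t to exp(-A*t), with A = (ln 2) / half_life."""
    return 1.0 - (math.log(2) / half_life) * t

# Hypothetical illustration: the same number of atoms with two different half-lives.
n_atoms = 1.0e20
short_lived = decay_rate(n_atoms, half_life=1.0)    # half-life of 1 time unit
long_lived = decay_rate(n_atoms, half_life=100.0)   # half-life of 100 time units

print("activity ratio, short/long half-life:", short_lived / long_lived)
print("mean life in half-lives:", mean_life(1.0))
print("straight line reaches zero at t = mean life:", linear_extrapolation(mean_life(1.0), 1.0))
```

A hundredfold longer half-life gives a hundredfold lower activity for the same number of atoms, and the straight-line extrapolation of the initial decay reaches zero at exactly one mean life.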

Above: Redwing-Seminole 13.7 kt shot inside a water tank at Eniwetok Atoll in 1956 to simulate shallow burial. The Wilson cloud shields much of the thermal radiation, while the enhanced cratering action deposits almost all of the radioactivity in the local fallout, seen here as the throwout from the crater. Teller and Latter explain how this kind of radiological warfare could make enemy forces evacuate an island like Iwo Jima (where the island had to be shelled with conventional weapons and flame-throwers, resulting in the death of 21,703 of the 22,786 Japanese soldiers, and the death of 6,825 allied soldiers) before receiving a lethal radiation dose, without any of the immoral carnage of shelling or other gross effects from conventional weapons. Another moral use of nuclear weapons that circumvents the carnage of conventional warfare is air bursts at altitudes just over the maximum fireball radius, to clear the conventional weapons defending coastal areas and beaches prior to an invasion such as the D-day landings: neutron-induced activity covers only a small area and the dose rates are relatively low once the aluminium-28 has decayed with a half-life of only 2.3 minutes.

Sr-90 exaggerations

Teller and Latter also explain how the threat from strontium-90 is grossly exaggerated. Sr-90 is more important than the equally long-lived Cs-137 because Cs-137, like potassium, resides in tissues whose cells are regularly renewed and thus is rapidly eliminated from the body, whereas a small fraction of Sr-90 ends up in the bones for life, creating a larger dose. (The I-131 problem and its countermeasures were discussed in detail earlier in the blog post linked here.) Once the fallout comes down, there is a brief spell of danger while the fallout particles are physically present on the leaves and stems of crops, but these can be washed off, and wind and rain soon wash the fallout particles into the soil where root uptake is important for the soluble component of the fallout activity. In coral soil or limestone based soil there is an abundance of calcium (coral is calcium carbonate) so chemically similar strontium gets crowded out and diluted.

In most American soils, however, there is less calcium, so with an average natural strontium to calcium mass abundance of 1:100, there is only about 27 kg of soluble natural strontium per acre. Adult humans have a natural strontium to calcium mass ratio of just 1:1,400 and contain only 0.7 gram of natural strontium. Hence, strontium uptake via the food chain from soil to human beings is discriminated against (relative to calcium) by the factor 14. These figures allow the dilution of strontium-90 to be calculated. Each step of the food chain discriminates against strontium relative to calcium (see also pages 1521-9 of the U.S. Congressional Hearings The Nature of Radioactive Fallout and Its Effects on Man, May-June 1957, which states on page 1529: "100 metres [depth] of sea water has 370 grams of dissolved calcium per square foot compared to the average of 20 grams per square foot for the top 2.5 inches of soil which absorbs and holds the fallout radiostrontium"):

(1) Soil: 1 g of Sr for every 100 g of Ca (protection factor = 1)

(2) Plants: 1 g of Sr for every 140 g of Ca (protection factor = 140/100 = 1.4)

(3) Milk: 1 g of Sr for every 980 g of Ca (protection factor = 980/100 = 9.8 for root uptake of soluble Sr in soil by grass, or 980/140 = 7 for Sr ingestion by cattle from fresh fallout particles still adhering directly to the grass)

(4) Human: 1 g of Sr for every 1,400 g of Ca (protection factor of 1400/100 = 14 for fallout in the soil, or 1400/140 = 10 for fallout on plants which are ingested by cattle)
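The protection factors in the four steps above are just ratios of the calcium-per-strontium figures. A minimal sketch, assuming nothing beyond the numbers listed (the dictionary and function names are illustrative):

```python
# Grams of calcium per gram of strontium at each step of the food chain, as listed above.
CA_PER_G_SR = {"soil": 100, "plants": 140, "milk": 980, "human": 1400}

def protection_factor(stage: str, source: str) -> float:
    """Discrimination against strontium (relative to calcium) between source and stage."""
    return CA_PER_G_SR[stage] / CA_PER_G_SR[source]

for stage in ("plants", "milk", "human"):
    print(f"{stage}: factor {protection_factor(stage, 'soil'):.1f} relative to soil")

for stage in ("milk", "human"):
    print(f"{stage}: factor {protection_factor(stage, 'plants'):.1f} relative to plants")
```

The "relative to plants" figures correspond to fresh fallout still adhering to grass, where the soil-to-plant step is bypassed.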

J. L. Kulp's report "Sr-90 in Man" published in Science, 8 February 1957, vol. 125, p. 219, showed that in 1955 the average diet for the human population of the United States contained 7 micro-microcuries of Sr-90 per gram of calcium. It also reported an average worldwide total body burden of 0.12 micro-microcuries per gram of skeletal calcium, and a concentration in young children 3-4 times higher (due to growing bones and thus greater calcium intake from drinking milk).

In 1996, half a century after the nuclear detonations, data on cancers from the Hiroshima and Nagasaki survivors was published by D. A. Pierce et al. of the Radiation Effects Research Foundation, RERF (Radiation Research vol. 146 pp. 1-27; Science vol. 272, pp. 632-3) for 86,572 survivors, of whom 60% had received bomb doses of over 5 mSv (or 500 millirem in old units) suffering 4,741 cancers of which only 420 were due to radiation, consisting of 85 leukemias and 335 solid cancers.
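As a quick check on the figures just quoted, the share of the recorded cancers attributed to radiation can be worked out directly. This is only arithmetic on the numbers in the paragraph above; the variable names are illustrative.

```python
# Figures quoted above from the 1996 RERF data.
total_cancers = 4741
radiation_leukemias = 85
radiation_solid_cancers = 335

radiation_cancers = radiation_leukemias + radiation_solid_cancers
radiation_fraction = radiation_cancers / total_cancers

# Unit conversion used in the text: 1 mSv = 100 millirem.
dose_msv = 5
dose_millirem = dose_msv * 100

print(f"cancers attributed to radiation: {radiation_cancers}")
print(f"share of all recorded cancers: {100 * radiation_fraction:.1f}%")
print(f"{dose_msv} mSv = {dose_millirem} millirem")
```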

‘Today we have a population of 2,383 [radium dial painter] cases for whom we have reliable body content measurements. . . . All 64 bone sarcoma [cancer] cases occurred in the 264 cases with more than 10 Gy [1,000 rads], while no sarcomas appeared in the 2,119 radium cases with less than 10 Gy.’

- Dr Robert Rowland, Director of the Center for Human Radiobiology, Bone Sarcoma in Humans Induced by Radium: A Threshold Response?, Proceedings of the 27th Annual Meeting, European Society for Radiation Biology, Radioprotection colloquies, Vol. 32CI (1997), pp. 331-8.

Zbigniew Jaworowski, 'Radiation Risk and Ethics: Health Hazards, Prevention Costs, and Radiophobia', Physics Today, April 2000, pp. 89-90:

‘... it is important to note that, given the effects of a few seconds of irradiation at Hiroshima and Nagasaki in 1945, a threshold near 200 mSv may be expected for leukemia and some solid tumors. [Sources: UNSCEAR, Sources and Effects of Ionizing Radiation, New York, 1994; W. F. Heidenreich, et al., Radiat. Environ. Biophys., vol. 36 (1999), p. 205; and B. L. Cohen, Radiat. Res., vol. 149 (1998), p. 525.] For a protracted lifetime natural exposure, a threshold may be set at a level of several thousand millisieverts for malignancies, of 10 grays for radium-226 in bones, and probably about 1.5-2.0 Gy for lung cancer after x-ray and gamma irradiation. [Sources: G. Jaikrishan, et al., Radiation Research, vol. 152 (1999), p. S149 (for natural exposure); R. D. Evans, Health Physics, vol. 27 (1974), p. 497 (for radium-226); H. H. Rossi and M. Zaider, Radiat. Environ. Biophys., vol. 36 (1997), p. 85 (for radiogenic lung cancer).] The hormetic effects, such as a decreased cancer incidence at low doses and increased longevity, may be used as a guide for estimating practical thresholds and for setting standards. ...

‘Though about a hundred of the million daily spontaneous DNA damages per cell remain unrepaired or misrepaired, apoptosis, differentiation, necrosis, cell cycle regulation, intercellular interactions, and the immune system remove about 99% of the altered cells. [Source: R. D. Stewart, Radiation Research, vol. 152 (1999), p. 101.] ...

‘[Due to the Chernobyl nuclear accident in 1986] as of 1998 (according to UNSCEAR), a total of 1,791 thyroid cancers in children had been registered. About 93% of the youngsters have a prospect of full recovery. [Source: C. R. Moir and R. L. Telander, Seminars in Pediatric Surgery, vol. 3 (1994), p. 182.] ... The highest average thyroid doses in children (177 mGy) were accumulated in the Gomel region of Belarus. The highest incidence of thyroid cancer (17.9 cases per 100,000 children) occurred there in 1995, which means that the rate had increased by a factor of about 25 since 1987.

‘This rate increase was probably a result of improved screening [not radiation!]. Even then, the incidence rate for occult thyroid cancers was still a thousand times lower than it was for occult thyroid cancers in nonexposed populations (in the US, for example, the rate is 13,000 per 100,000 persons, and in Finland it is 35,600 per 100,000 persons). Thus, given the prospect of improved diagnostics, there is an enormous potential for detecting yet more [fictitious] "excess" thyroid cancers. In a study in the US that was performed during the period of active screening in 1974-79, it was determined that the incidence rate of malignant and other thyroid nodules was greater by 21-fold than it had been in the pre-1974 period. [Source: Z. Jaworowski, 21st Century Science and Technology, vol. 11 (1998), issue 1, p. 14.]’

W. L. Chen, Y. C. Luan, M. C. Shieh, S. T. Chen, H. T. Kung, K. L. Soong, Y. C. Yeh, T. S. Chou, S. H. Mong, J. T. Wu, C. P. Sun, W. P. Deng, M. F. Wu, and M. L. Shen, ‘Is Chronic Radiation an Effective Prophylaxis Against Cancer?’, published in the Journal of American Physicians and Surgeons, Vol. 9, No. 1, Spring 2004, page 6, available in PDF format here:

‘An extraordinary incident occurred 20 years ago in Taiwan. Recycled steel, accidentally contaminated with cobalt-60 ([low dose rate, gamma radiation emitter] half-life: 5.3 y), was formed into construction steel for more than 180 buildings, which 10,000 persons occupied for 9 to 20 years. They unknowingly received radiation doses that averaged 0.4 Sv, a collective dose of 4,000 person-Sv. Based on the observed seven cancer deaths, the cancer mortality rate for this population was assessed to be 3.5 per 100,000 person-years. Three children were born with congenital heart malformations, indicating a prevalence rate of 1.5 cases per 1,000 children under age 19.

‘The average spontaneous cancer death rate in the general population of Taiwan over these 20 years is 116 persons per 100,000 person-years. Based upon partial official statistics and hospital experience, the prevalence rate of congenital malformation is 23 cases per 1,000 children. Assuming the age and income distributions of these persons are the same as for the general population, it appears that significant beneficial health effects may be associated with this chronic radiation exposure. ...’
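The rates quoted by Chen et al. can be cross-checked against one another. The sketch below uses only the numbers in the quotation; the person-years and the number of children are not stated there, so they are back-calculated from the quoted rates and should be read as implied values, not reported ones.

```python
# Figures from the quotation above.
persons = 10_000
mean_dose_sv = 0.4
observed_cancer_deaths = 7
observed_rate_per_100k_py = 3.5
general_rate_per_100k_py = 116.0
observed_malformations = 3
observed_malformation_per_1000 = 1.5
general_malformation_per_1000 = 23.0

collective_dose = persons * mean_dose_sv  # person-Sv

# Implied person-years: deaths divided by the quoted rate (an inference, not a reported figure).
implied_person_years = observed_cancer_deaths / (observed_rate_per_100k_py / 100_000)
expected_cancer_deaths = general_rate_per_100k_py / 100_000 * implied_person_years

# Implied number of children, inferred the same way from the quoted prevalence.
implied_children = observed_malformations / (observed_malformation_per_1000 / 1000)
expected_malformations = general_malformation_per_1000 / 1000 * implied_children

print(f"collective dose: {collective_dose:.0f} person-Sv")
print(f"implied person-years: {implied_person_years:.0f}")
print(f"cancer deaths expected at the general rate: {expected_cancer_deaths:.0f}")
print(f"implied children: {implied_children:.0f}")
print(f"malformations expected at the general rate: {expected_malformations:.0f}")
```

The implied 200,000 person-years corresponds to 10,000 people over 20 years, which matches the upper end of the 9 to 20 year occupancy in the quotation.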

‘Professor Edward Lewis used data from four independent populations exposed to radiation to demonstrate that the incidence of leukemia was linearly related to the accumulated dose of radiation. ... Outspoken scientists, including Linus Pauling, used Lewis’s risk estimate to inform the public about the danger of nuclear fallout by estimating the number of leukemia deaths that would be caused by the test detonations. In May of 1957 Lewis’s analysis of the radiation-induced human leukemia data was published as a lead article in Science magazine. In June he presented it before the Joint Committee on Atomic Energy of the US Congress.’ – Abstract of thesis by Jennifer Caron, Edward Lewis and Radioactive Fallout: the Impact of Caltech Biologists Over Nuclear Weapons Testing in the 1950s and 60s, Caltech, January 2003.

Dr John F. Loutit of the Medical Research Council, Harwell, England, in 1962 wrote a book called Irradiation of Mice and Men (University of Chicago Press, Chicago and London), discrediting the pseudo-science from geneticist Edward Lewis on pages 61, and 78-79:

‘... Mole [R. H. Mole, Brit. J. Radiol., v32, p497, 1959] gave different groups of mice an integrated total of 1,000 r of X-rays over a period of 4 weeks. But the dose-rate - and therefore the radiation-free time between fractions - was varied from 81 r/hour intermittently to 1.3 r/hour continuously. The incidence of leukemia varied from 40 per cent (within 15 months of the start of irradiation) in the first group to 5 per cent in the last compared with 2 per cent incidence in irradiated controls. …

‘What Lewis did, and which I have not copied, was to include in his table another group - spontaneous incidence of leukemia (Brooklyn, N.Y.) - who are taken to have received only natural background radiation throughout life at the very low dose-rate of 0.1-0.2 rad per year: the best estimate is listed as 2 x 10^-6 like the others in the table. But the value of 2 x 10^-6 was not calculated from the data as for the other groups; it was merely adopted. By its adoption and multiplication with the average age in years of Brooklyners - 33.7 years and radiation dose per year of 0.1-0.2 rad - a mortality rate of 7 to 13 cases per million per year due to background radiation was deduced, or some 10-20 per cent of the observed rate of 65 cases per million per year. ...

‘All these points are very much against the basic hypothesis of Lewis of a linear relation of dose to leukemic effect irrespective of time. Unhappily it is not possible to claim for Lewis’s work as others have done, “It is now possible to calculate - within narrow limits - how many deaths from leukemia will result in any population from an increase in fall-out or other source of radiation” [Leading article in Science, vol. 125, p. 963, 1957]. This is just wishful journalese.

‘The burning questions to me are not what are the numbers of leukemia to be expected from atom bombs or radiotherapy, but what is to be expected from natural background .... Furthermore, to obtain estimates of these, I believe it is wrong to go to [1950s inaccurate, dose rate effect ignoring, data from] atom bombs, where the radiations are qualitatively different [i.e., including effects from neutrons] and, more important, the dose-rate outstandingly different.’
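The multiplication Loutit describes can be reproduced in a few lines. The sketch assumes that the adopted value of 2 x 10^-6 is a leukemia probability per person per rad of accumulated dose per year, which is the reading under which the quoted product comes out at 7 to 13 cases per million per year; the variable names are illustrative.

```python
# Figures from the Loutit quotation above.
RISK_PER_RAD_PER_YEAR = 2e-6          # adopted value, assumed per person per rad per year
MEAN_AGE_YEARS = 33.7                 # average age of the Brooklyn population
BACKGROUND_RAD_PER_YEAR = (0.1, 0.2)  # low and high background dose rates
OBSERVED_PER_MILLION = 65.0           # observed leukemia rate per million per year

def background_cases_per_million(dose_rate_rad_per_year: float) -> float:
    """Annual leukemia cases per million attributed to background on the linear hypothesis."""
    accumulated_dose_rad = dose_rate_rad_per_year * MEAN_AGE_YEARS
    return RISK_PER_RAD_PER_YEAR * accumulated_dose_rad * 1e6

low, high = (background_cases_per_million(d) for d in BACKGROUND_RAD_PER_YEAR)

print(f"cases per million per year: {low:.1f} to {high:.1f}")
print(f"share of observed rate: {100 * low / OBSERVED_PER_MILLION:.0f}% "
      f"to {100 * high / OBSERVED_PER_MILLION:.0f}%")
```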

Our Nuclear Future: Facts, Dangers, and Opportunities, by Edward Teller and Albert L. Latter (Criterion Books, New York, 1958):

Page 167:

'If we continue to consume [fossil] fuel at an increasing rate, however, it appears probable that the carbon dioxide content of the atmosphere will become high enough to raise the average temperature of the earth by a few degrees. If this were to happen, the ice caps would melt and the general level of the oceans would rise. Coastal cities like New York and Seattle might be inundated. Thus the industrial revolution using ordinary chemical fuel could be forced to end ... However, it might still be possible to use nuclear fuel.'

Page 147:

'All the energy in that Nevada explosion was not quite sufficient to evaporate the water droplets in a cloud one mile broad, one mile wide, and one mile deep. This is not a very big rain cloud. ... Nuclear explosions are violent enough. But compared to the forces of nature - compared even with the daily release of energy from not particularly stormy weather - all our bombs are puny.'

Above: Dr Zaius in Planet of the Apes simultaneously held religious and scientific positions, leading him to suppress scientific findings which contradicted the religious dogma. You know, like my suppression by Britain's Open University physics department chairman, Professor Russell Stannard, author of books like Science and the Renewal of Belief:

"offering fresh insight into original sin, the trials experienced by Galileo, the problem of pain, the possibility of miracles, the evidence for the resurrection, the credibility of incarnation, and the power of steadfast prayer. By introducing simple analogies, Stannard clears up misunderstandings that have muddied the connections between science and religion, and suggests contributions that the pursuit of physical science can make to theology",

arguing that science should be alloyed with dogma again as a "unification" of physics and religion, as it was in the time of Galileo. Actually, this makes some sense when you recognise that Stannard takes "physics" to include the religious belief in uncheckable pseudoscience: a landscape of 10^500 different universes to account for the vast number of possible particle physics theories which can be generated by the 100 or more moduli for the shape of the unobservably small compactification of 6 dimensions assumed to exist in the speculative Calabi-Yau manifold of string theory, as well as other rubbish like Aspect's alleged "experimental evidence" on entanglement via correlation of particle spins:

"In some key Bell experiments, including two of the well-known ones by Alain Aspect, 1981-2, it is only after the subtraction of ‘accidentals’ from the coincidence counts that we get violations of Bell tests. The data adjustment, producing increases of up to 60% in the test statistics, has never been adequately justified. Few published experiments give sufficient information for the reader to make a fair assessment." - http://arxiv.org/PS_cache/quant-ph/pdf/9903/9903066v2.pdf

"The quantum collapse [in the mainstream interpretation of quantum mechanics, where a wavefunction collapse occurs whenever a measurement of a particle is made] occurs when we model the wave moving according to Schroedinger (time-dependent) and then, suddenly at the time of interaction we require it to be in an eigenstate and hence to also be a solution of Schroedinger (time-independent). The collapse of the wave function is due to a discontinuity in the equations used to model the physics, it is not inherent in the physics." - Thomas Love, California State University.

When I was a physics student with a mechanism for gravity that correctly predicted the cosmological acceleration two years ahead of its discovery, Stannard didn't even reply personally but just passed my paper to Dr Bob Lambourne, who in 1996 wrote to me that my prediction for quantum gravity and cosmological acceleration was not important because it is not within the metaphysical, non-falsifiable domain of Professor Edward Witten's stringy speculations on 11-dimensional 'M-theory'. In 1986, Professor Stannard was awarded the Templeton Project Trust Award for ‘significant contributions to the field of spiritual values; in particular for contributions to greater understanding of science and religion’. So who says the Planet of the Apes story is completely fictional, aside from a little hairiness?

Above: Nova (Linda Harrison) portrayed in 3978 AD, in the 1968 movie Planet of the Apes. A nuclear war destroys 'civilization' leaving beautiful dumb girls like Nova. However, the film is politically correct and adds mutant aggressive apes to earth's survivors to make sure that the nuclear war 'survivors will envy the dead' (as Nikita Khrushchev claimed, quoted in Pravda, 20 July 1963), just as politically correct dogma requires.

Above: another view; maybe the alleged health benefits, like enhanced lifespan and lower cancer rates, from low level residual radiation in Hiroshima and Nagasaki contribute to her very healthy appearance?

‘Planet of the Apes’ started out as a Pierre Boulle novel in which a couple discover a bottle containing the story of how humans become dictatorial, slovenly and lazy by using apes as slaves to do their work, until there is a rebellion and an ape revolution reverses the situation. Humans are too cowardly to fight back and submit to the chains of oppression. Apes become the masters of human slaves. The twist at the end of the novel occurs when Boulle reveals that the story in a bottle has not been found by humans but rather by a couple of apes (who have read it with astonishment and dismiss the story just as a silly hoax).

The film, however, is another story and is based on a film script by ‘Twilight Zone’ master Rod Serling and Michael Wilson, and in some ways is a reversal of the underlying politics of Boulle's book (producer Arthur P. Jacobs contacted Pierre Boulle and asked him to take a look at the script; Boulle responded on April 29, 1965 that "he truly did not like the Statue of Liberty ending, feeling that it cheapened the story as a whole, and served as the 'temptation from the Devil'..."). Instead of the disaster coming through pacifist humans refusing to fight against oppression, it occurs (in the film) as a result of humans fighting one another with nuclear weapons and destroying the cities of human civilization, giving the apes in jungles the opportunity to take over the planet. However, some parts of Pierre Boulle's original plot are resurrected in the sequels to the 1968 film, where the mechanism by which the apes take over the planet is the use of ape slaves who rebel.

The first film, in the script by Rod Serling, starts with three astronauts taking an 18-month (ship time) journey supposed to cover a distance of 320 light years in 2,000 earth years, at a velocity of 320/2000 = 0.16c. At 16% of light velocity, ship time passes at just (1 - 0.16^2)^(1/2) = 0.987 of the rate of earth time, so the ship time passing would be 1,974 years, not the 18 months that is claimed in the film. Deep sleep cubicles in the ship are used to keep the astronauts alive with the use of minimal resources during the journey. Serling changed the twist that Boulle used by having the ship hit an asteroid half way into the trip, cracking the plastic cubicle of the female astronaut and causing her to prematurely age and die in her sleep. This causes the computer to automatically abort the mission and turn the ship back towards the earth, which in the screenplay by Serling is discovered when the computer tapes are read later (this episode was omitted from the film). The ship, returning to a grossly altered earth with no surviving runways, crash lands in a lake.
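The time dilation estimate above follows from the special relativity factor sqrt(1 - (v/c)^2). A minimal sketch using the distance and duration quoted from the script; the last step, the speed that would actually compress 2,000 earth years into 18 months of ship time, is an added illustration and ignores acceleration phases.

```python
import math

def time_dilation_factor(beta: float) -> float:
    """Ship time elapsed per unit of earth time at constant speed beta = v/c."""
    return math.sqrt(1.0 - beta ** 2)

distance_light_years = 320.0
earth_years = 2000.0

beta = distance_light_years / earth_years   # average speed as a fraction of c
factor = time_dilation_factor(beta)
ship_years = earth_years * factor

# Speed needed for only 18 months of ship time to pass during 2,000 earth years.
required_factor = 1.5 / earth_years
required_beta = math.sqrt(1.0 - required_factor ** 2)

print(f"speed: {beta:.2f}c")
print(f"dilation factor: {factor:.3f}")
print(f"ship time: {ship_years:.0f} years")
print(f"speed needed for an 18-month trip: {required_beta:.10f}c")
```

At the required speed the ship would cover almost 2,000 light years in that time, not 320, so the script's figures cannot all hold at once.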

The astronauts discover that on this planet the apes rule dumb, ignorant humans. In the final scene, the twist revealing that the planet is actually the earth (which should have been pretty obvious from the similar gravity, atmosphere, sun, moon, star positions in the sky, and so forth) is done by showing the Statue of Liberty half buried by beach sand. A nuclear war has apparently occurred during the 2,000 years that elapsed. The second film in the series, Beneath the Planet of the Apes, furthers this theme by having the surviving astronaut Taylor (Charlton Heston, appropriately nicknamed ‘Charlie Hero’ off-set by the chimpanzee actor Roddy McDowall) and beautiful savage girl Nova discover an underground colony of surviving radiation-mutated humans worshipping a cobalt-cased ‘alpha-omega doomsday bomb’. Sublime political message: ‘the survivors in a nuclear war will have to live for thousands of years underground and will be mutants that envy the dead.’ Not exactly the truth about the harmlessness of slowly-decaying (i.e. low dose rate) cobalt fallout (which can simply be swept up and buried long before anyone gets a dangerous dose) compared to the survivable but more dangerous fast-decaying (i.e. high dose rate) fission products:

'Everybody's going to make it if there are enough shovels to go around...Dig a hole, cover it with a couple of doors and then throw three feet of dirt on top. It's the dirt that does it.'

- Thomas K. Jones, Deputy Under Secretary of Defense for Strategic and Theater Nuclear Forces, Research and Engineering, LA Times 16 January 1982.

The apes follow them underground and, after his girlfriend Nova is killed in the fighting, the bitter, love-cheated Charlie Hero decides to destroy the planet in anger, finally succeeding by falling on to the doomsday button which ends the story, just as in Pierre Boulle’s previous film Bridge on the River Kwai the crazy hero falls on the detonator switch when shot, blowing up the bridge. Fortunately the alpha-omega bomb - presumably because it's capable of destroying the whole planet - is the one bomb made which doesn’t have a permissive action link or require authority codes and dual key activation to arm, with the key holes too far apart for one person to simultaneously turn both together. After all, you don't want to make such a dangerous bomb very hard to accidentally set off, do you, at least not if you're using it as the ending to a fine film?

This fictional tale, in lieu of the full facts on nuclear weapons effects, helped to cement the myth in popular culture that nuclear weapons are a danger to human civilization, rather than deterring world war.

Fraction of activity in local fallout

One of the interesting things about this 1958 book by Teller and Latter is that it gives details of how the atmospheric Nevada testing tried to minimise local fallout. E.g., on page 98, they claim that if the test is on a 'tower so tall that the fireball cannot touch the surface ... the amount of close-in fallout is reduced from eighty per cent to approximately five per cent.'

However, this figure is misleading! The actual percentage of the gamma activity in local fallout from 30 Nevada tower bursts at heights exceeding 100 W^(1/3) feet, where W is the yield in kilotons (it did not decrease at heights above that, due to the contribution to local fallout from the condensed iron oxides produced by the fireball enveloping the tower material), was 20% of that of a surface burst, not 5%.

This 20% figure comes from Jack C. Greene, et al., Response to DCPA Questions on Fallout, Prepared by the Subcommittee on Fallout, Advisory Committee on Civil Defense of the U.S. National Academy of Sciences, U.S. Defense Civil Preparedness Agency, DCPA Research Report No. 20, November 1973. This report was written by a committee composed of top experts on fallout such as Dr Carl F. Miller who had collected the fallout at Castle and Plumbbob and developed the fallout model used by DCPA, and Dr R. Robert Rapp of RAND Corporation who had analyzed the effect of the toroidal distribution of activity in the mushroom clouds of Bravo and Zuni upon the fallout pattern.

The proportion of activity in local fallout depends on which nuclides you are considering, so it is a different number for gamma and beta activity and for different times after burst. If you quote the percentage of unfractionated activities (like Zr-95) in local fallout, that is much larger than the percentage of the fractionated I-131, Cs-137, Sr-89 and Sr-90 in local fallout. Most of the fractionated nuclide decay chains have somewhat different volatilities, so they fractionate to different degrees. Therefore, there is no natural way to define what is meant by the fraction of activity that comes down in local fallout. One artificial way to define it is to consider the local fallout fraction as the gamma exposure rate normalized to 1 hour after burst and integrated over the area of the local fallout pattern. This includes fractionation to the extent that it reduces the average gamma exposure rate at the reference time of one hour after burst.

On page 3 they note that the radiation level at a fixed time after burst from a unit mass of fallout per unit area increases as the particle size decreases, e.g. the radiation level for a given deposition density at a fixed time after burst actually increases as you move further downwind from ground zero:

‘This observation is consistent with the consensus that radiochemical fractionation causes this ratio to decrease with increasing particle size.’

In other words, the value of the ratio (R/hr at 1 hour)/(fission kiloton/square mile) is smaller for highly fractionated close-in fallout (which is depleted in volatile fission products) than it is for the unfractionated and enriched fallout deposited at great distances:

‘This problem has been customarily circumvented by using what amounts to an average of this ratio over the region of “local” fallout, where “local” was defined at the convenience of the author.’

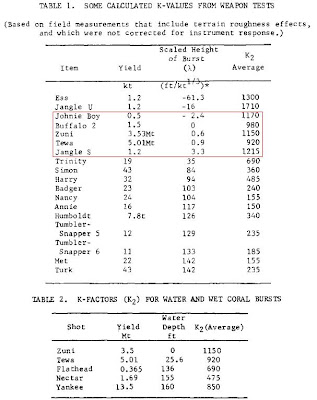

They denote the average “local” fallout (R/hr at 1 hour)/(fission kiloton/square mile) ratio as K1, while the unfractionated fission product value is K0, so K1/K0 = fraction of activity in local fallout.

K1 is reduced by 25% due to instrument response to multidirectional gamma rays from fallout when calibrated using point sources. The batteries in the instrument partly shield the detector from gamma rays coming from certain directions, and the partial shielding of the instrument by the body of the person holding the instrument is also important for fallout measurements. It is also reduced by about 25% due to terrain shielding of direct gamma rays from fallout that collects in small hollows (microrelief) on the ground. Hence, the actual measured ratio, K2 = 0.75 x 0.75 K1 = 0.56 K1.
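The two corrections can be written as a pair of conversion functions. This sketch takes both reductions as exactly 25%, as the prose states; the constant and function names are illustrative, and the K1 value of 1000 is a hypothetical input, not a figure from the report.

```python
# Both corrections taken as 25% reductions, per the description above (an assumption
# about the exact factors; the report may use slightly different values).
INSTRUMENT_FACTOR = 0.75   # instrument response to multidirectional gamma rays
TERRAIN_FACTOR = 0.75      # shielding by ground microrelief

def k2_from_k1(k1: float) -> float:
    """Measured ratio K2 expected from the average local fallout ratio K1."""
    return INSTRUMENT_FACTOR * TERRAIN_FACTOR * k1

def k1_from_k2(k2: float) -> float:
    """Recover K1 from a measured K2 by undoing both corrections."""
    return k2 / (INSTRUMENT_FACTOR * TERRAIN_FACTOR)

def local_fallout_fraction(k1: float, k0: float) -> float:
    """Fraction of activity in local fallout, K1/K0 (K0 = unfractionated value)."""
    return k1 / k0

hypothetical_k1 = 1000.0
print(f"combined correction: {k2_from_k1(1.0):.4f}")
print(f"K2 for a hypothetical K1 of {hypothetical_k1:.0f}: {k2_from_k1(hypothetical_k1):.1f}")
```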

‘Local fallout’ has been defined in three different ways by different people, causing confusion over how to average K1. One way is to define local fallout as fallout larger than a particular fallout grain size, another way is to define it as radiation levels greater than a particular dose rate at a given time after detonation, and a third way is to define it as the fallout deposited within a certain period of time, such as 24 hours after detonation.

Page 4 states that the best surface burst data is for 0.5 kt Johnie Boy (1170), 1.5 kt Buffalo-2 (980), 3.53 Mt Zuni (1150), 5.01 Mt Tewa (920), and 1.2 kt Sugar (1215), giving a mean of 1090 for K2 and 1930 for K1.
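As a quick arithmetic check on these figures, the following Python sketch averages the five quoted K2 values and backs out K1. It assumes, as the text above states, that the instrument-response and terrain-shielding reductions are each 25%; the variable names are illustrative and do not come from the report.

```python
# K2 values quoted from page 4, in (R/hr at 1 hour)/(fission kiloton/square mile)
k2_surface = {
    "Johnie Boy": 1170,
    "Buffalo-2": 980,
    "Zuni": 1150,
    "Tewa": 920,
    "Sugar": 1215,
}

# Simple arithmetic mean of the five surface-burst values
mean_k2 = sum(k2_surface.values()) / len(k2_surface)

# Assumption: two independent 25% reductions (instrument response, terrain shielding)
correction = 0.75 * 0.75

# Undo the combined correction to estimate K1 from the measured K2
k1_estimate = mean_k2 / correction

print(f"mean K2 = {mean_k2:.0f}")
print(f"K1 ~ {k1_estimate:.0f}")
```

Under these assumptions the mean K2 comes to about 1087 and K1 to about 1932, which round to the quoted 1090 and 1930.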

P. 8 states that the average K2 for 30 Nevada steel tower tests with tower heights (scaled by cube-root of yield to 1 kt) of 100 ft or more is 220 (R/hr at 1 hour)/(fission kiloton/square mile); the fallout did not diminish below this value because of the steel of the tower. For 40 air bursts at similar scaled altitudes, the mean is K2 = 25 (R/hr at 1 hour)/(fission kiloton/square mile).

Hence, high tower shots produce 100*220/1090 = 20% of the local fallout gamma dose rates of surface bursts, while free air bursts at heights above the fireball radius produce only 100*25/1090 = 2.3% of the fallout of surface bursts.

The Trinity result of K2 = 690 for a steel tower burst at a height of 37 W^(1/3) feet (W being the yield in kilotons) is 100*690/1090 = 63% of the fallout of a surface burst and is equivalent to a 1 Mt detonation on a 30 storey steel framed building.
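The percentages and the cube-root height scaling quoted above can be reproduced with a short Python sketch. The function names are hypothetical, and the only inputs are the K2 values and the 37 ft scaled height given in the text.

```python
SURFACE_K2 = 1090  # mean surface-burst K2 quoted in the text


def percent_of_surface(k2):
    """Return a burst type's K2 as a percentage of the surface-burst mean."""
    return 100.0 * k2 / SURFACE_K2


def tower_height_ft(scaled_height_ft, yield_kt):
    """Actual height for a cube-root scaled height (feet at 1 kt) and a yield in kt."""
    return scaled_height_ft * yield_kt ** (1.0 / 3.0)


for name, k2 in [("high tower", 220), ("free air burst", 25), ("Trinity", 690)]:
    print(f"{name}: {percent_of_surface(k2):.1f}% of surface-burst fallout")

# The Trinity scaled height applied to a 1 Mt (1000 kt) burst
print(f"1 Mt equivalent tower height: {tower_height_ft(37, 1000):.0f} ft")
```

For 1 Mt the scaled height works out to about 370 ft, which fits the 30 storey comparison if one assumes roughly 12 ft per storey (a figure not given in the source).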

On p. 13, after investigating the local fallout fractions from Pacific surface bursts on coral islands, reefs and on the ocean water surface, they concluded that the type of surface did not have a substantial effect on the measured amount of local fallout produced by nuclear surface bursts.

On p. 17, after observing that iodine in fallout is highly fractionated since it is volatile and condenses late in the fireball history on to the surfaces of the remaining small particles (i.e., it is depleted from the local close-in fallout), they explain that the Japanese fishermen exposed to Bravo fallout on 1 March 1954 just north of Rongelap Atoll were found to have 7 times as much external gamma radiation exposure as thyroid iodine exposure.

In the July 1962 104 kt Sedan test in Nevada, a man who was exposed in the open to the base surge without any protection received a thyroid gland dose only slightly higher than his external gamma exposure. Three air samplers determined that no more than 10% of the iodine in the Sedan fallout was present as a vapour during the cloud passage; i.e., 90% or more of the iodine was fixed in the silicate Sedan fallout and was unable to evaporate from the fallout particles to give a soluble vapour.

P. 19: ‘There is evidence that much if not all heavy fallout observed during atmospheric nuclear tests was visible as individual particles falling and striking objects, or as deposits ... the forehead will feel like sandpaper to the touch of the hand. The gritty sensation will also be felt on the hands and on bared arms. ... Probably you do not have a radiation-measuring instrument (if you do you can work outside until the instrument reads 0.5 R/hr), but heavy fallout can still be detected by one of these several clues: Seeing fallout particles, fine, soil-coloured, some fused, bouncing upon or hitting a solid object, particularly visible on shining surfaces such as the hood or top of a car or truck. ... Feeling particles striking the nose or forehead ... In the rain, after turning on the windshield wiper of your car, seeing fallout particles in raindrops slide downward on the glass and pile up at the edge of the wiper stroke, like dust or snow.’

P. 20: ‘Typical specific activities of fallout particles are 5 x 10^14 fissions/gram of fallout; thus for each R/hr at 1 hour exposure rate produced, 5 milligrams of particles would be deposited per sq ft of area.’ For a minimal sickness gamma dose of 150 R over a week outdoors, 50 R/hr at 1 hour would be needed, requiring 0.25 gram per square foot of fallout to be deposited at 1 hour, which is readily visible on surfaces.
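The deposit mass follows directly from the quoted 5 milligrams per square foot for each R/hr at 1 hour. A minimal Python sketch of that conversion, with a hypothetical function name, is:

```python
MG_PER_SQFT_PER_R_HR = 5.0  # quoted: 5 mg per sq ft for each R/hr at 1 hour


def deposit_g_per_sqft(dose_rate_r_hr_at_1h):
    """Fallout mass per square foot (grams) for a given 1-hour reference dose rate."""
    return dose_rate_r_hr_at_1h * MG_PER_SQFT_PER_R_HR / 1000.0


# 50 R/hr at 1 hour is the rate the text associates with 150 R over a week outdoors
print(f"{deposit_g_per_sqft(50):.2f} g per sq ft")
```

This only scales the quoted figure linearly; it does not model how specific activity varies with particle size or distance downwind.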

P. 27: Dr Timothy Fohl and A. D. Ealay of Mt. Auburn Research Associates (MARA) used a buoyant vortex fireball model in their 1972 report Vortex Ring Model of Single and Multiple Cloud Rise, DNA-2945F, to simulate the effect of two simultaneous 13.5 Mt nuclear surface bursts. If they are detonated within 5 fireball diameters of each other, they merge while rising into a single cloud which reaches only 66% of the altitude reached by an individual detonation.

Going back to the Teller and Latter book, their figure of 5% for high tower shots roughly applies to the fractionated I-131, Cs-137, Sr-90 and Sr-89 in local fallout, rather than to the mixture of unfractionated and fractionated activities which give rise to the total gamma radiation field from local fallout. On page 99 they state:

'In the case ... where the fireball almost touches the ground, the close-in fallout is also only about five percent [actually, as we saw above, for 40 free air bursts where the fireball did not touch the ground, it was only 2.3% of the fallout gamma activity of surface bursts]. This is a somewhat surprising fact since in this case photographs show large quantities of surface material being sucked up into the cloud, just as they are in a true surface explosion.

'This material certainly consists of large, heavy dirt particles which subsequently fall out of the cloud. Yet most of them somehow fail to come in contact with the radioactive fission products.

'This peculiar phenomenon can be understood by looking at the details of how the fireball rises. At first the central part of the fireball is much hotter than the outer part and thus it rises more rapidly. As it rises, however, it cools and falls back around the outer part, creating in this way a doughnut-shaped structure. The whole process is analogous to the formation of an ordinary smoke ring.

'In most of the photographs one sees, the doughnut is obscured by the cloud of water that forms, but sometimes when the weather is particularly dry, it becomes perfectly visible. During the rather orderly circulation of air through the hole, the bomb debris and the dirt that has been sucked up remain separated.'

Above: toroidal circulation in the 1953 Climax test: dust passes up through the middle of the toroid without mixing with the ring shaped fireball, then it cools as it hits cold air at the top, causing it to cascade back around the outside of the fireball. Result: harmless, non-radioactive fallout of dust which has never come into contact with the radioactive toroidal shaped fireball (a ring doughnut shape with a hollow in the middle).

Above: toroidal fireball in the 1953 Grable nuclear air burst.

Above: photos taken at 17, 27 and about 50 seconds after the French nuclear test Licorne (a 914 kt balloon suspended shot, at 500 m altitude on 3 July 1970). The fireball thermal radiation is initially shielded by the expanding Wilson condensation cloud, which forms in a humid atmosphere in the low pressure, cooling air of the negative pressure blast phase (some distance behind the ever expanding compressed shock front). Edward Teller and Albert Latter clearly describe the scientific phenomenon of the white 'skirt' surrounding the mushroom stem for bursts in humid air, on page 84 of their 1958 book Our Nuclear Future:

'It is actually a cloud: a collection of droplets of water too small to turn into rain but big enough to reflect the white light of the sun. ... The white skirts (which are not always present) do not consist of any material that is falling out of the cloud. On the contrary, a moist layer of air is sucked up into the cloud from the side and the droplets which form in this layer give rise to a cloud-sheet with the appearance of a skirt.'

Above: the lethal global fallout fallacy started with the 1949 book by David Bradley, No Place to Hide, which grossly exaggerated the Crossroads-BAKER fallout.

The effects of small doses of plutonium were falsely claimed to be harmful using metaphysical linear extrapolation from high dose radium effects, in lieu of actual data for low doses. When eventually in the 1970s and 1980s the detailed dosimetry for thousands of early radium dial painters was done (by exhuming the corpses and actually measuring the radium in the bones), it was discovered that alpha radiation effects internally were a threshold effect requiring a minimum of 1,000 rads or 10 Gy, so the linear dose-effects theory was bunk:

‘Today we have a population of 2,383 [radium dial painter] cases for whom we have reliable body content measurements. . . . All 64 bone sarcoma [cancer] cases occurred in the 264 cases with more than 10 Gy [1,000 rads], while no sarcomas appeared in the 2,119 radium cases with less than 10 Gy.’

- Dr Robert Rowland, Director of the Center for Human Radiobiology, Bone Sarcoma in Humans Induced by Radium: A Threshold Response?, Proceedings of the 27th Annual Meeting, European Society for Radiation Biology, Radioprotection colloquies, Vol. 32CI (1997), pp. 331-8.

DCPA Attack Environment Manual -